Lambda's Superintelligence Cloud: Building AI Infrastructure for a Post-AGI World

Stephen Balaban's Lambda is betting billions that AI will need radically different infrastructure — and that "one person, one GPU" is the future of computing

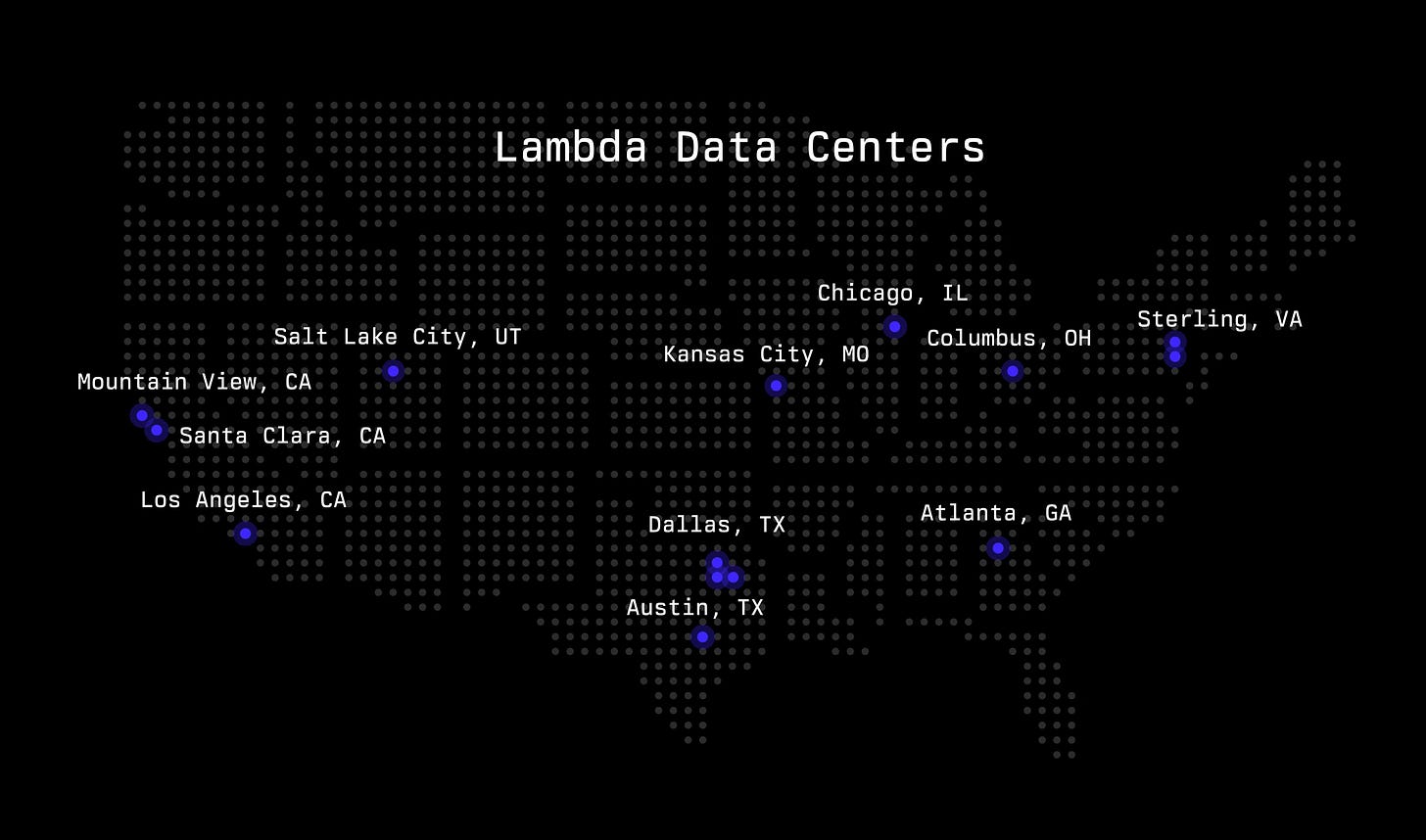

Lambda calls itself "The Superintelligence Cloud" — a bold claim for a company competing against trillion-dollar tech giants. Yet when Stephen Balaban explained his vision to Bloomberg Technology in February 2025, the numbers suggested this wasn't mere Silicon Valley hyperbole. The company had just raised $480 million at a $2.5 billion valuation, generated over $400 million in revenue while maintaining profitability, and deployed more than 25,000 GPUs across gigawatt-scale data centers.

"We are building a long-lived iconic company that is going to be here for the next hundred years," Balaban declared, framing Lambda's mission in civilizational terms.1 The company's tagline — making AI computation "as effortless and ubiquitous as electricity" — reveals ambitions that extend far beyond competing on price with AWS.2

Founded in 2012 as a facial recognition API company,3 Lambda has evolved into something unprecedented: a pure-play AI infrastructure provider that believes the entire cloud computing paradigm needs to be rebuilt for the age of artificial intelligence. While hyperscalers add AI capabilities to their general-purpose platforms, Lambda is building infrastructure exclusively for a world where, as Balaban envisions, "every man, woman, and child on the planet" has their own GPU.4

"This is the largest technological revolution we've seen in our lifetime," Balaban told Bloomberg's Caroline Hyde, "and it is completely fundamentally reshaping the way humans interact with computers."5

"In the future, you won't need any software at all. The neural network will completely replace it." - Stephen Balaban

The Architecture of Superintelligence

Lambda's infrastructure philosophy starts with a radical premise: traditional cloud computing is obsolete for AI workloads. The company reports more than 1.7 gigawatts of contracted power, enough to run a small city, dedicated exclusively to AI computation.6 Every design decision, from networking topology to cooling systems, assumes a future dominated by massive neural networks.

"Unlike other clouds and other system providers, we focus on just this one particular use case, which is deep learning training," Balaban explained. "Customers need to ask themselves if they really need the gold-plated datacenter service experience of having Amazon Web Services be their operations team managing the infrastructure, because that is very expensive."7

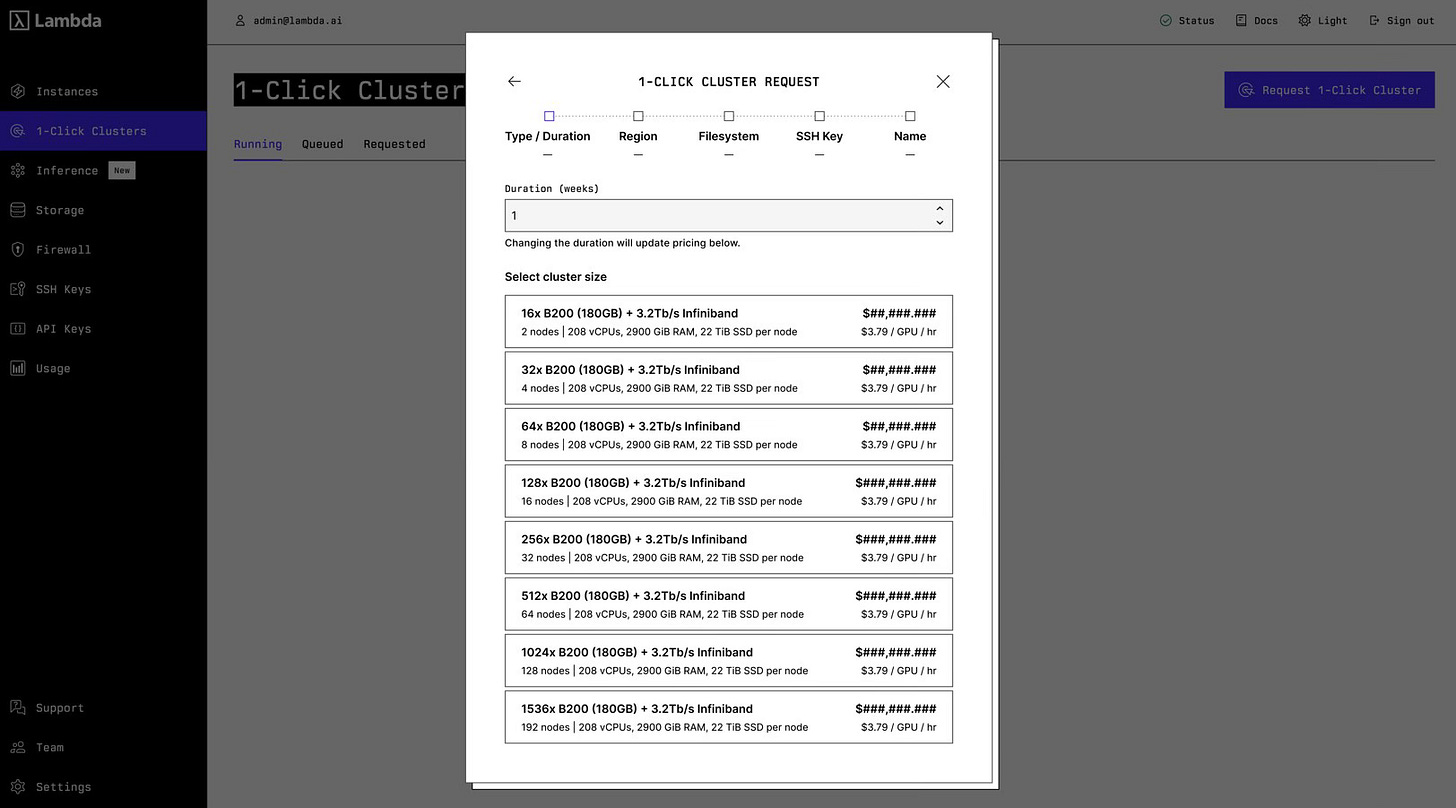

This specialization enables Lambda to offer NVIDIA H100 GPUs starting at $2.49 per GPU-hour on-demand, with committed usage rates as low as $1.85 per GPU-hour.8 The company's newest B200 GPUs, featuring 180GB of HBM3e memory and 8TB/s of memory bandwidth, are available for $3.79 per hour on-demand or as low as $2.99 per hour with commitments. For comparison, AWS charges between $7.57 and $12.29 per H100 GPU-hour on its p5.48xlarge instances.9
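To make the gap concrete, here is a rough back-of-the-envelope sketch in Python using only the per-GPU-hour prices quoted above. The function names, the 8-GPU node size, and the 730-hour month are illustrative assumptions, not figures from Lambda or AWS, and real bills would also depend on storage, networking, and commitment terms.

```python
# Prices in USD per GPU-hour, as quoted in the article.
LAMBDA_H100_ON_DEMAND = 2.49
LAMBDA_H100_COMMITTED = 1.85
AWS_H100_LOW = 7.57
AWS_H100_HIGH = 12.29


def savings_pct(cheaper: float, pricier: float) -> float:
    """Percentage saved by paying `cheaper` instead of `pricier`."""
    return (1 - cheaper / pricier) * 100


def monthly_cost(price_per_gpu_hour: float, gpus: int = 8, hours: int = 730) -> float:
    """Cost of running `gpus` GPUs around the clock for one month.

    The 8-GPU node and 730-hour month are assumptions for illustration.
    """
    return price_per_gpu_hour * gpus * hours


if __name__ == "__main__":
    for label, price in [("on-demand", LAMBDA_H100_ON_DEMAND),
                         ("committed", LAMBDA_H100_COMMITTED)]:
        low = savings_pct(price, AWS_H100_LOW)
        high = savings_pct(price, AWS_H100_HIGH)
        print(f"Lambda {label}: {low:.1f}% to {high:.1f}% below the AWS range")
    print(f"8x H100 for a month on Lambda: ${monthly_cost(LAMBDA_H100_ON_DEMAND):,.0f}")
    print(f"8x H100 for a month on AWS (low end): ${monthly_cost(AWS_H100_LOW):,.0f}")
```

On these numbers, Lambda's on-demand rate works out to roughly two-thirds to four-fifths below the quoted AWS range, and the committed rate lands somewhat lower still.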

But Lambda's differentiation goes beyond pricing. The company's infrastructure includes non-blocking InfiniBand networking with 3.2Tb/s bandwidth, GPUDirect RDMA for zero-CPU overhead in GPU-to-GPU communication, and one-click deployment of clusters ranging from 16 to over 1,500 GPUs.10 This isn't incremental optimization — it's infrastructure designed for a fundamentally different computing paradigm.

The Open-Source Accelerant

The January 2025 release of DeepSeek-R1 marked an inflection point for Lambda's strategy. The open-source reasoning model, which reportedly performed on par with OpenAI's o1 reasoning model at a fraction of the cost, triggered what Lambda executives described as unprecedented demand for GPU capacity.

"Lambda is really well positioned as a company to take advantage of open source AI models like DeepSeek-R1," Balaban told CNBC, "because we have well over 25,000 GPUs on our cloud platform that can be readily repurposed to host these open source models."11

Lambda's platform can serve DeepSeek R1 at over 20,000 tokens per second — performance levels that enable real-time applications previously impossible with proprietary models.12 This capability reflects a deeper thesis about AI's evolution: as models become commoditized through open source, competitive advantage shifts to infrastructure providers who can deliver compute most efficiently.

"Over the last twelve months, AI has become more democratized because of two forces: open source and LLM reasoning," Balaban stated in the Series D announcement. "Open-source reasoning models allow anybody on the planet to contribute to the progress of AI and no company is better positioned than Lambda to provide that compute."13

Infrastructure for an Uncertain Future

Balaban's public statements reveal an unusual mix of technological optimism and existential concern about AI's trajectory. In a February 2024 tweet, he wrote: "This is the strongest evidence that I've seen so far that we will achieve AGI with just scaling compute. It's genuinely starting to concern me. I used to think that we would run into roadblocks: end of scaling laws, maybe we don't have the right model architecture, power density."14

This belief that AGI might arrive through brute-force scaling drives Lambda's infrastructure strategy. The company isn't just building for today's GPT models but for systems that might require orders of magnitude more compute.

Lambda's Texas expansion illustrates this forward-looking approach. The company is partnering with Aligned Data Centers on a $700 million, 425,000-square-foot facility in Plano, designed specifically for liquid-cooled, highest-density GPU deployments.15 Even more ambitious is Lambda's role as anchor tenant in ECL's groundbreaking Houston project: the world's first fully sustainable 1-gigawatt data center powered entirely by hydrogen, representing an $8 billion total investment.16

"Every presentation, every piece of sales collateral, every advertisement, every video game, you're going to start to see more and more AI compute embedded in those things," Balaban explained on Bloomberg in March 2025, "which means that demand is going to continue to grow more than exponentially."17

The Business Model of Revolution

Lambda's financial performance suggests its contrarian strategy is working. "Last year we shipped over $400 million of top line revenue," Balaban revealed in February 2025. "We are profitable. That is to say we have positive operating cash flow, positive EBITDA."18

This profitability while scaling aggressively defies conventional wisdom about infrastructure businesses. The company has raised $863 million in equity funding,19 supplemented by a $500 million debt facility from Macquarie Group specifically for GPU purchases.20 The February 2025 Series D, co-led by Andra Capital and SGW with participation from NVIDIA, Andrej Karpathy, and In-Q-Tel, valued Lambda at $2.5 billion.21

The customer roster validates Lambda's approach: over 5,000 organizations including Microsoft, Amazon Research, Kaiser Permanente, Stanford, Harvard, and the Department of Defense.22 These aren't experimental deployments but production workloads from organizations with alternatives.

"Our platform is fully verticalized, meaning we can pass dramatic cost savings to end users compared to other providers," explained Robert Brooks, Lambda's VP of Revenue.23

The Software Extinction Event

For Balaban, the current AI boom represents something more profound than a new computing platform — it's the beginning of software's end as we know it.

"As a software engineer today I can ask it to generate a full video game. I can ask it to generate, for example, the Lambda chat iOS application and it generates it in one shot with no errors," he told Bloomberg Technology in February 2025.24

This capability, which Balaban calls "one-shotting," suggests a future where traditional software development becomes obsolete: "AI is replacing all the traditional software that's been developed over the last 50 years and replacing it with a hybrid sort of human and neural software hybrid. And that is a big deal."

If this thesis proves correct, Lambda's focused approach positions it to capture disproportionate value in a transformed industry. The company's infrastructure isn't just optimized for training today's models; it is designed for a world where AI generation replaces human programming entirely.

The Hundred-Year Bet

Lambda's strategy represents a specific vision of AI's future: one where specialized infrastructure trumps general-purpose platforms, where open-source models commoditize intelligence, and where compute becomes as fundamental as electricity. The company is literally building for "superintelligence" — not as metaphor but as engineering specification.

"AI is fundamentally restructuring our economy," Balaban stated in the funding announcement. "Lambda is investing billions of dollars to build the software platform and infrastructure powering AI. We build software tools that delight the AI Developer, and a platform that truly puts AI into the hands of the many."25

The upcoming deployment of NVIDIA's GB300 NVL72 systems, which Lambda claims will deliver "50x higher reasoning model inference output compared to NVIDIA Hopper,"26 suggests the company's infrastructure advantage might actually widen as AI models become more sophisticated.

For investors, Lambda presents a clear thesis: if AI development continues its current trajectory, specialized infrastructure providers will capture outsized value. The company's profitable growth at $400 million revenue validates the near-term opportunity. The longer-term question is whether Balaban's vision of ubiquitous AI — "one person, one GPU" — represents prescient positioning.

"one person, one GPU" - Stephen Balaban

What's certain is that Lambda has already achieved something remarkable: forcing the cloud giants to respond. When a thirteen-year-old startup can undercut AWS's H100 pricing by roughly 67% to 80% while maintaining profitability, it suggests the rules of infrastructure competition are changing. Lambda has already proven that the cloud computing playbook needs rewriting for the age of artificial intelligence.

The superintelligence cloud might sound like science fiction. But with billions in infrastructure, thousands of customers, and a profitable business model, Lambda is building it anyway. In a world racing toward AGI, that might be the only strategy that matters.

Lambda is in talks about a funding round that could value the cloud infrastructure company at $4B to $5B ahead of a potential IPO by the end of 2025.27

Roberto Veronese is an investor and LP in Alumni Ventures, which participated in Lambda's funding rounds.

Bloomberg Technology interview with Stephen Balaban, February 24, 2025

Lambda.ai/about, accessed August 2025

TechCrunch, "Following Facebook's Shut Down Of Face.com's Facial Recognition API, Lambda Labs Debuts An Open Source Alternative," September 4, 2012

Lambda Series D funding announcement, February 19, 2025

Bloomberg Technology interview with Stephen Balaban, February 24, 2025

Lambda.ai/hyperscale, accessed August 2025

The Next Platform interview with Stephen Balaban, 2021

Lambda.ai/service/gpu-cloud/1-click-clusters, accessed August 2025

AWS EC2 P5 instance pricing documentation, accessed August 2025

Lambda.ai/service/gpu-cloud/private-cloud, accessed August 2025

CNBC, "AI cloud startup Lambda raises $480 million in new round," February 19, 2025

Lambda.ai/service/gpu-cloud/1-click-clusters, accessed August 2025

Lambda Series D funding announcement, February 19, 2025

Stephen Balaban Twitter/X post, February 2024 (@stephenbalaban)

The Dallas Morning News, "Nvidia-backed AI computing firm has plans for $700M Plano data center," May 7, 2025

Business Wire, "ECL Announces World's First 1 Gigawatt Off-Grid, Hydrogen-Powered AI Factory Data Center," September 25, 2024

Bloomberg Technology interview with Stephen Balaban, March 31, 2025

Bloomberg Technology interview with Stephen Balaban, February 24, 2025

Sacra Research, "Lambda Labs revenue, valuation & growth rate," 2025

Ibid.

Data Center Dynamics, "AI cloud Lambda raises $480m," February 19, 2025

VentureBeat, "Lambda launches 'inference-as-a-service' API," December 12, 2024

Bloomberg Technology interview with Stephen Balaban, February 24, 2025

Lambda Series D funding announcement, February 19, 2025

Lambda.ai homepage, accessed August 2025